Introduction

In the rapidly evolving field of artificial intelligence (AI), staying ahead of the curve is essential. One of the key techniques that have gained prominence is “Prompt Engineering for Generative AI.”

Prompt engineering is the art of asking the right questions to get the best output from a large language model (LLM). It lets you interact with the LLM directly through plain-language prompts, without deep knowledge of datasets, statistics, or modeling techniques. In essence, prompt engineering lets us “program” LLMs in natural language.

Best Practices for Prompting

- Clear Communication: Clearly communicate what content or information is most important.

- Structured Prompts: Structure your prompts in three parts:

  - Start by defining the role the model should play.

  - Provide context or input data.

  - Give clear instructions.

- Use Examples: Use specific, varied examples to help the model narrow its focus and generate accurate results.

- Constraints: Use constraints to limit the scope of the model’s output and reduce the risk of factual inaccuracies.

- Break Down Complex Tasks: Divide complex tasks into a sequence of simpler prompts.

- Self-Evaluation: Instruct the model to check its own response (for example, against the length or format constraints you set) before presenting its final answer.

- Creativity Matters: Be creative! The more open-minded you are, the better your results will be.
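The structure described in these practices (a role, then context, then instructions, plus optional examples, constraints, and a self-check) can be captured in a small reusable template. The Python sketch below is illustrative only: `PromptSpec` and `build_prompt` are hypothetical names, and the section labels ("Role:", "Context:", and so on) are one reasonable layout, not a format any particular model requires. It only assembles the prompt text; sending it to an LLM is left out.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of a structured prompt."""
    role: str          # who the model should act as
    context: str       # background information or input data
    instructions: str  # what the model should do
    examples: list[str] = field(default_factory=list)     # optional specific, varied examples
    constraints: list[str] = field(default_factory=list)  # limits on scope or format
    self_check: bool = False  # if True, ask the model to review its answer first


def build_prompt(spec: PromptSpec) -> str:
    """Assemble role, context, and instructions in order, then any optional parts."""
    parts = [
        f"Role: {spec.role}",
        f"Context: {spec.context}",
        f"Instructions: {spec.instructions}",
    ]
    if spec.examples:
        parts.append("Examples:\n" + "\n".join(f"- {ex}" for ex in spec.examples))
    if spec.constraints:
        parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in spec.constraints))
    if spec.self_check:
        parts.append("Before answering, check that your response follows every constraint above.")
    # Blank lines between sections keep each part visually distinct.
    return "\n\n".join(parts)


spec = PromptSpec(
    role="You are a travel writer for first-time visitors.",
    context="The reader is visiting New York City for three days in spring.",
    instructions="Suggest five blog post ideas.",
    examples=["A first-timer's guide to the subway", "Where to eat near Central Park"],
    constraints=["Keep each idea under 15 words.", "Do not invent opening hours or prices."],
    self_check=True,
)
print(build_prompt(spec))
```

Keeping each part in its own field makes it easy to swap examples or tighten constraints without rewriting the whole prompt.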

Types of Prompts

- Direct Prompting (Zero-shot):

  - Simplest type: provides only the instruction, without examples.

  - Example: “Can you give me a list of ideas for blog posts for tourists visiting New York City for the first time?”

- Role Prompting:

  - Assigns a “role” to the model.

  - Example: “You are a mighty and powerful prompt-generating robot. Design a prompt for the best outcome.”

- Chain-of-Thought Prompting:

  - Encourages the model to explain its reasoning step by step before giving its final answer; combining it with a few worked examples often helps on tasks that require reasoning.
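To make the three prompt types concrete, here is a hedged Python sketch that builds each kind of prompt as a plain string. The function names are hypothetical, and the chain-of-thought cue ("Let's think step by step") is one common phrasing rather than a fixed requirement; passing a worked example turns it into few-shot chain-of-thought prompting.

```python
from typing import Optional


def zero_shot(task: str) -> str:
    """Direct (zero-shot) prompting: the instruction alone, with no examples."""
    return task


def role_prompt(role: str, task: str) -> str:
    """Role prompting: ask the model to adopt a role before stating the task."""
    return f"You are {role}. {task}"


def chain_of_thought(task: str, worked_example: Optional[str] = None) -> str:
    """Chain-of-thought prompting: ask for step-by-step reasoning before the answer.

    If a worked example is given, it is placed first so the model can follow
    its reasoning format (few-shot chain-of-thought)."""
    prefix = f"{worked_example}\n\n" if worked_example else ""
    return f"{prefix}{task}\nLet's think step by step, then give the final answer on its own line."


# A worked example whose reasoning the model can imitate.
example = (
    "Q: A coffee costs $4 and a bagel costs $3. What do 2 coffees and 1 bagel cost?\n"
    "A: 2 coffees cost 2 x $4 = $8. Adding 1 bagel at $3 gives $8 + $3 = $11. Final answer: $11"
)

print(zero_shot("Can you give me a list of ideas for blog posts for tourists visiting New York City for the first time?"))
print(role_prompt("a mighty and powerful prompt-generating robot", "Design a prompt for the best outcome."))
print(chain_of_thought(
    "Q: A museum ticket costs $30 and a subway ride costs $2.90. What do 2 tickets and 4 rides cost?",
    example,
))
```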

Sources

Google for Developers – Prompt Engineering for Generative AI

HackerRank Blog – What is Prompt Engineering? Explained

Wikipedia – Prompt Engineering

Examples of LLMs

Large language models (LLMs) have transformed natural language processing. These deep learning models are trained on large amounts of text using self-supervised learning, meaning the training signal comes from the text itself (for example, predicting the next word or a hidden word) rather than from human-labeled data. Here are some notable examples of LLMs:

- ChatGPT by OpenAI: Built on OpenAI’s GPT models and known for its conversational abilities, ChatGPT generates coherent, context-aware responses.

- BERT (Bidirectional Encoder Representations from Transformers) by Google: BERT is a pre-trained model that excels in understanding context and bidirectional relationships in text.

- LaMDA by Google: LaMDA focuses on improving conversational AI and generating more natural responses.

- PaLM by Google: Developed to enhance language understanding and generation; its successor, PaLM 2, powered Bard.

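- BLOOM and XLM-RoBERTa: BLOOM came out of the BigScience project led by Hugging Face, and XLM-RoBERTa was developed by Facebook AI and is distributed through Hugging Face. Both perform well across a range of NLP tasks.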

- NeMo LLM by NVIDIA: Designed for efficient natural language understanding.

- XLNet, Cohere’s models, and GLM-130B: Each contributes to advancing natural language processing and generative AI.

Remember, LLMs continue to evolve, and new models are released regularly. Their applications span text generation, machine translation, chatbots, and more.
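Self-supervised learning means the training targets are derived from raw text rather than from human labels. The Python sketch below illustrates the two objectives most associated with the models listed above: next-token prediction (used by GPT-style models) and masked-token prediction (used by BERT). It makes simplifying assumptions: tokens are whitespace-separated words rather than the subword tokens real models use, and masked positions are chosen by hand instead of at random. The function names are hypothetical.

```python
def causal_lm_pairs(tokens: list[str]) -> list[tuple[list[str], str]]:
    """Next-token prediction (GPT-style): each prefix of the text is an input,
    and the token that follows it is the target."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_lm_example(
    tokens: list[str], mask_positions: set[int], mask_token: str = "[MASK]"
) -> tuple[list[str], dict[int, str]]:
    """Masked-token prediction (BERT-style): hide chosen tokens; the model must
    recover them using context from both the left and the right."""
    masked = [mask_token if i in mask_positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(mask_positions)}
    return masked, targets


# Simplification: split on whitespace instead of using a real subword tokenizer.
tokens = "prompt engineering shapes model output".split()

# Every (prefix, next token) pair is a free training example.
for context, target in causal_lm_pairs(tokens):
    print(context, "->", target)

# Hide the third token; the original word becomes the label.
masked, targets = masked_lm_example(tokens, {2})
print(masked, targets)
```

In both cases the labels come straight from the input sentence, which is why no manual annotation is needed.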

The 5 Best AI Prompt Engineering Certifications

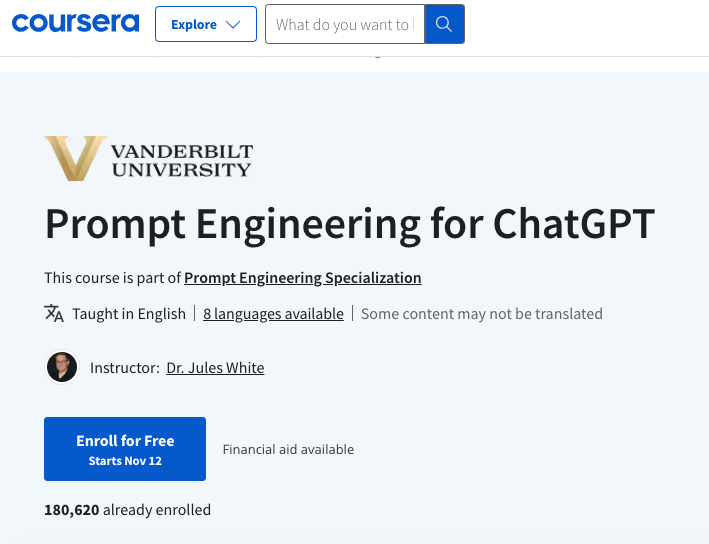

1. Prompt Engineering for ChatGPT from Coursera

2. ChatGPT Prompt Engineering for Developers from DeepLearning.AI

3. Learn Prompt Engineering from Prompt Engineering Institute

4. AI Foundations: Prompt Engineering from Arizona State University (ASU)

5. Prompt Engineering for Web Developers from Coursera

The Role of LSI Keywords

Latent Semantic Indexing (LSI) keywords, a term borrowed from search engine optimization for semantically related words and phrases, can help diversify and refine prompts. Including related terms gives the model more context about the topic, which can make its responses more relevant and accurate and improve the quality of AI-generated content.
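As a minimal illustration, the Python sketch below appends a short list of related terms to a prompt. It assumes the related terms have already been chosen by hand; it does not perform latent semantic indexing itself (which derives related terms from a term-document matrix via singular value decomposition). `add_related_terms` and the wording it appends are hypothetical.

```python
def add_related_terms(prompt: str, related_terms: list[str], max_terms: int = 5) -> str:
    """Append up to max_terms related terms to a prompt to steer the model toward the topic.

    Terms are stripped of surrounding whitespace, and case-insensitive duplicates
    are dropped while keeping the first occurrence and the original order."""
    seen: set[str] = set()
    unique: list[str] = []
    for term in related_terms:
        cleaned = term.strip()
        key = cleaned.lower()
        if key and key not in seen:
            seen.add(key)
            unique.append(cleaned)
    if not unique:
        return prompt  # nothing to add
    chosen = unique[:max_terms]
    return f"{prompt}\nWhere relevant, cover these related topics: {', '.join(chosen)}."


print(add_related_terms(
    "Write a short guide to budget travel in New York City.",
    ["cheap hotels", "free museums", "Cheap Hotels", "subway passes", " street food "],
))
```

Capping the number of terms keeps the prompt focused; a long keyword list can dilute the main instruction.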

Applications of Prompt Engineering for Generative AI

- Content Generation – In content marketing and journalism, AI-powered systems can assist in generating articles, blog posts, and reports.

- Virtual Assistants – Siri, Alexa, and Google Assistant rely on P.M

- Chatbots – They can handle customer inquiries, troubleshoot issues, and engage in meaningful conversations.

- Language Translation

- Creative Writing

Conclusion

Prompt engineering for generative AI is a game-changer in artificial intelligence. By mastering the art of crafting precise, context-aware prompts, developers and businesses can get far more out of AI models. Well-designed prompts help generate human-like content, reduce biased output, and deliver personalized experiences. As AI continues to advance, prompt engineering is likely to remain central to how people interact with AI systems.